Below is my contribution to the recent collection on Algorithmic Governance published in Antipode.

Policing the Future City: Robotic Being-in-the-World

Sensor Prison

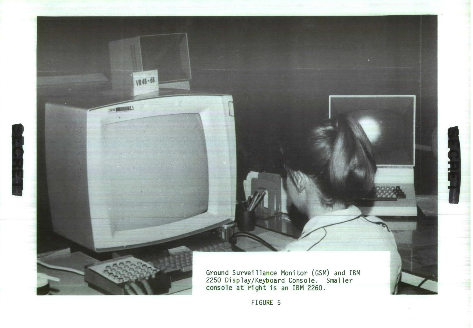

Ripe was the time for a revolution in police surveillance. The Vietnam War served as a laboratory for cybernetic experiments in the management of state power and violence. During the 1960s, the US Department of Defense began to automate warfare with the aid of computer algorithms. What became known as the “electronic battlefield” was a networked system of acoustic and seismic sensors, airplanes, and IBM supercomputers that detected enemy movements in the forest and automated the US military’s kill chain (Dickson 2012). This vaunted apparatus of Technowar (Gibson 2000) revolutionized how the US military conducted the age-old art of killing. By the end of the conflict, the electronic battlefield was the object of fervid speculation, excitement, and anxiety. In a 1975 article, The New York Times prophesied: “Wars fought by planes without pilots. . . Guns that select their own targets. Missiles that read maps. Self-operated torpedoes on the ocean floor. Laser cannons capable of knocking airplanes out of the sky. Satellite battles on the other side of the moon” (Stanford 1975).

Figure 1: A US computational surveillance centre in Thailand monitored ground sensor information during the Vietnam War. Photo credit: Project CHECO Report, Igloo White, January 1970–September 1971, US Air Force.

Decades later, wars are now fought by planes without (onboard) pilots, although lunar conflicts have yet to materialize. But the real revolution of the Vietnam War was always more insidious: during the 1970s, the electronic battlefield snaked back to the homeland. Sensor technology was used by domestic law enforcement for policing, prisons, and border control. Under Operation Intercept—the anti-narcotics search and seizure operations that fueled President Nixon’s war on drugs—the US-Mexico border was converted into a testing ground for the domestication of the electronic battlefield. Buried seismic sensors were deployed by Border Patrol in the summer of 1970. Only the imagination limited how the electronic battlefield could be used for policing.

In 1971, Joseph Meyer, a US engineer who worked for the Air Force and National Security Agency (NSA), published his vision for a Crime Deterrent Transponder System. This futuristic blueprint detailed a new method for policing crime in the city: fitting millions of American parolees, recidivists, and bailees with small radio transponders. These would continually broadcast the location of tagged individuals to police sensors, creating an immersive public surveillance network. “As a consequence, the system of confining criminals in prisons and jails, to punish them or prevent them from committing further crimes, can be replaced by an electronic surveillance and command-control system to make crime pointless” (Meyer 1971: 2). Replacing carceral enclosures, the city itself would be reengineered into a boundless radio prison.

Meyer’s city of sensors would envelop “the criminal with a kind of externalized conscience—an electronic substitute for the social conditioning, group pressures, and inner motivation which most of the society lives with” (Meyer 1971: 17). Decades later, reality has caught up with Meyer’s design: GPS ankle monitors are now routinely used to track parolees and probationers, sealing them inside an externalized conscience. More generally, the electronic battlefield, born in the mud and blood of Vietnam, has seeped into the bedrock of the modern smart city, which now seeks to sense and track our intimate mobilities. Algorithmic forms of governance have revolutionized who watches us and how we are watched.

Deterritorializing Algorithmic Policing

The algorithm, defined broadly “as both technical process and synecdoche for ever more complex and opaque socio-technical assemblages” (Amoore and Raley 2017: 3), has shifted the conditions of possibility for mass surveillance in our dense technical environments, enchanting the anonymous surfaces of the city and the intimate interfaces of habit. To extend Meyer’s turn of phrase, the algorithm produces not only an externalized conscience but also an externalized consciousness. The algorithm performs something of an electro-neurological continuum: a univocity of being in which calculation and thinking, consciousness and conscience, cause and effect, the biological and digital, and proximity and distance collapse into uncertain circuits.

Inwards, ever inwards, trickles the flow of who we are into distant clouds (Amoore 2016). The mass centralization of (big) data performed by the algorithm is inseparable from the technological prostheses that cocoon and extend human subjectivity: cell phones, automatic license plate readers, credit cards, facial recognition technologies, computers, and good old-fashioned CCTV. These background apparatuses perform an ambient, territorial intelligence: sending data onwards, altering (non)human mobilities, opening and closing, archiving and cross-referencing, enabling and constricting. The footprints of our lives echo in anonymous government buildings and shiny corporate hives.

For most of its early history, the algorithm was entombed in static shells, a ghost in the machine, patiently watching. Now, no longer: escaping from remote clouds, the algorithm has discovered new robotic bodies to enchant and awaken. Released from the background, liberated from inertia, algorithms are emancipating technics in new lines of flight. In an era of deep learning and swarm intelligence, the algorithm is enabling the deterritorialization of multiple vectors of algorithmic governance. And pivotal to this process is the robot, which fuses sensors, algorithms, and motors together. Algorithms are translating state power into not only more intelligent technics (which has always been their function) but perhaps more significantly, mobile technics.

Rather than enabling, restricting, and conditioning other (non)human bodies, algorithms now find themselves inside robots capable of conditioning the world directly. The question then becomes: what is at stake when self-learning algorithms propel robots that can move, sense, think, act, and react in the social spaces of human coexistence? Does the autonomous robot, big or small, materialize new modes of algorithmic being-in-the-world?

Robotic Being-in-the-World

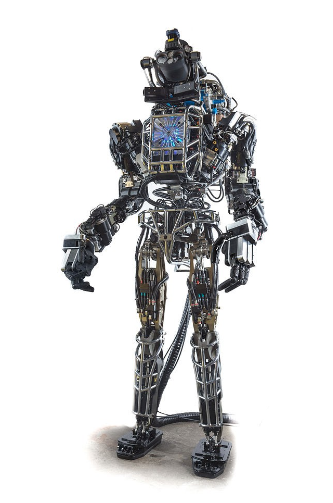

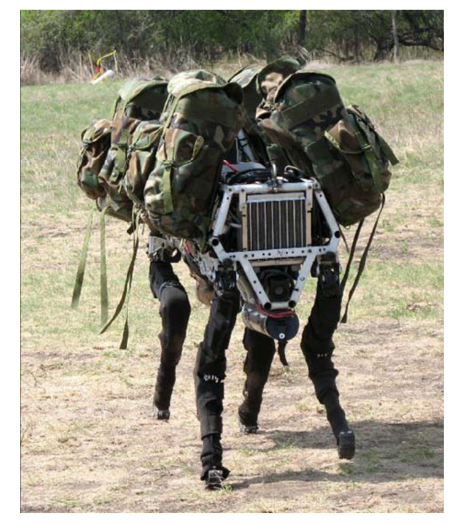

Robots have long produced disruptive geographies. Yet autonomous robots, as opposed to automatic robots, complicate the who, the what, and the how of these spatialities and temporalities. Whether a swarm of micro drones flying with advanced sense-and-avoid algorithms, or Boston Dynamics’ BigDog, a four-legged ground robot that mimics the gait of a dog, these kinds of robots pose interesting problematics. As actors very much in the midst of the world, alongside us in physical co-presence as our alien coexistents, such robots will be productive of space-times that extend and rework current understandings of algorithmic governance. Indeed, consider the Pentagon-financed Atlas. The envisioned uses for this humanoid robot range from aiding humanity in natural disasters to caring for the elderly. The New York Times described Atlas as “a striking example of how computers are beginning to grow legs and move around in the physical world.”

Figure 2: First-generation Atlas, a humanoid robot created by DARPA and Boston Dynamics. Image credit: DARPA (2013), available at Wikimedia Commons.

Algorithms, of course, are always-already worldly. They are embodied (Wilcox 2017), performative, world-making forces, existing across the interfaces of human and nonhuman. As Amoore and Raley (2017:5) write, “it is not merely that algorithms are applied as technological solutions to security problems, but that they filter, expand, flatten, reduce, dissipate and amplify what can be rendered of a world to be secured.” Accordingly, rather than ask only the “how” of the algorithm, we must also ask: “what kinds of perceptions and calculations of the world, what kinds of geographies, become possible” (Amoore 2016:9). To understand the worldliness of algorithms is to view them as forces embedded in the flesh, texture, steel, stone, and undulating atmospheres of our more-than-human coexistence.

The military applications for robotics are already vast. Drone warfare has installed remote power topologies that collapse human pilots with targets thousands of miles away (Shaw 2016). Future autonomous drones (such as the Anglo-French Unmanned Combat Air System) will collapse these targets in entirely robotic topologies, materializing an electronic battlefield in which humans are on the loop, but not necessarily in the loop. This prospect has already contributed to growing anxiety about the rise of so-called killer robots.

Figure 3: BigDog quadruped robot, developed by Boston Dynamics with funding from DARPA. Image credit: DARPA (2012), available at Wikimedia Commons.

Beyond the battlefield, the potential uses of autonomous robots for policing, including predictive policing, are similarly wide-ranging. Law enforcement agencies in the US and UK already use algorithmic systems to direct police officers to geolocated crime “hotspots.” These programs model vast amounts of data to seize the future. And advances in AI are priming, but not determining, the conditions for robots themselves to occupy algorithmically generated hotspots. Whether in the form of flying swarms or humanoid robots, this artificial being-in-the-world complicates the consciousness and conscience of police power.

Closing Thoughts

Decades after the electronic battlefield introduced ground sensors and computer algorithms into the orbits of state violence, advanced robots now collapse sensors, algorithms, and motors inside a single animate shell. The robotic condition, as uncertain as it remains, will surely require us to consider the impact of these deterritorialized technics upon the conduct of state power. While the spaces of algorithmic authority are currently located in cloud-based data banks that “defy conventional territorial jurisdictions” (Amoore and Raley 2017: 4), how might autonomous robots, as “walking computers,” disclose new algorithmic sites, subjects, and being-in-the-world?

Acknowledgments

I would like to thank Jeremy Crampton and Andrea Miller for organizing the 2016 AAG panel (Algorithmic Governance) that this paper is based on. I’d also like to thank the ESRC.

References

- L Amoore (2016) Cloud geographies: computing, data, sovereignty. Progress in Human Geography, online first, doi:10.1177/0309132516662147.

- L Amoore and R Raley (2017) Securing with algorithms: knowledge, decision, sovereignty. Security Dialogue 48(1): 3-10.

- P Dickson (2012) The Electronic Battlefield. Takoma Park: FoxAcre Press.

- J W Gibson (2000) The Perfect War: Technowar in Vietnam (2nd ed.). New York: Atlantic Monthly Press.

- J A Meyer (1971) Crime deterrent transponder system. IEEE Transactions on Aerospace and Electronic Systems 7(1):2-22.

- I G R Shaw (2016) Predator Empire: Drone Warfare and Full Spectrum Dominance. Minneapolis: University of Minnesota Press.

- P Stanford (1975) The automated battlefield. New York Times, February 23.

- L Wilcox (2017) Embodying algorithmic war: gender, race, and the posthuman in drone warfare. Security Dialogue 48(1): 11-28.